Some friends in a coding chat I’m part of were asking about how to get better at AI-driven coding. They were wondering if the issues they were facing stemmed from a skill gap, poor prompts, a lack of understanding of the tools, or if the tools themselves just weren’t that good. It’s a fair question — especially when you see people online doing seemingly incredible things with AI. Are those people just better at prompting, or is there something deeper going on?

Why It’s a Skill Issue and How You Can Improve

I believe it’s a skill issue — not because developers are unskilled, but because this is a new skill to learn. It requires a different way of solving problems. For example, someone can be an excellent engineer, capable of solving complex math and science problems, but still struggle with communication.

I’m often reminded of this video, which I think is a good analogy for communication gaps.

Introducing the Three Key Skills

While AI-driven workflows encompass many factors, there are three core capabilities that stand out:

- Clear Communication

- Structured Planning

- Well-Structured Code Architecture

Clear communication is best illustrated by those videos where one person gives another instructions for making a peanut butter and jelly sandwich. Each step presents challenges because execution requires far more precise detail than we naturally communicate. Humans are great at reading between the lines and filling in gaps from experience, but AI lacks that intuition. AI requires explicit instructions from start to finish.

Another skill that humans often don’t develop, but that is crucial for AI workflows, is structured planning. How many of us start the day with a detailed plan of what we’re going to do, when we’re going to do it, and in what order? And even if we do, how many of us actually stick to that plan? Personally, I don’t; my workflow is more reactive. But for AI, having a well-defined plan is essential. AI tends to follow its instructions literally, so a structured game plan can make a big difference.

The third skill that’s useful — at least for now — is architecting a well-structured coding project. I’m not sure how long this will remain important, as AI is getting better at it, but currently, understanding good code structure helps guide AI towards better outputs. Often, AI generates long, messy files instead of creating useful abstractions. However, if given the right instructions, it can produce clean, maintainable code. So, knowing what high-quality code looks like and how to prompt AI to generate it is still a valuable skill.

That said, I believe that good communication and structured planning will always be critical skills in this AI-driven era, while code architecture may become less relevant as AI improves.

Applying These Concepts in a Real Project

Now that we’ve established why these three skills are crucial, let’s shift from theory to practice by exploring how they come together in a real-world project scenario.

Recently, I wanted to practice AI-assisted coding for a new project involving three technologies I hadn’t worked with before:

- Medusa 2 — an e-commerce framework

- Anthropic’s MCP servers — using their protocol and TypeScript SDK

- Vercel’s AI SDK — for easier LLM integration and streaming

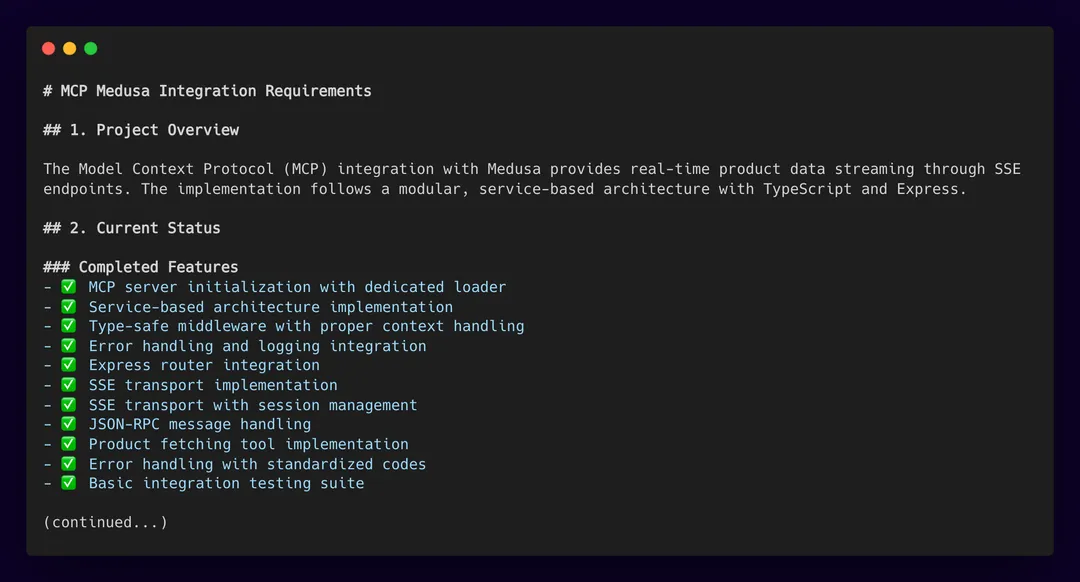

The goal was to build an MCP server that integrates with Medusa 2, allowing an admin to create, update, or delete products.
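To make that goal concrete, here is a minimal TypeScript sketch of the three admin actions as small, explicitly typed tools. It is an illustration only: the in-memory `Map` stands in for Medusa, and none of the names (`productTools`, `create_product`, `ToolResult`, and so on) come from the MCP SDK or Medusa's API.

```typescript
// Hypothetical sketch: the names and shapes here are assumptions for
// illustration, not the MCP SDK's or Medusa's real API.
type Product = { id: string; title: string; price: number };
type ToolResult =
  | { ok: true; product: Product }
  | { ok: false; error: string };

// In-memory stand-in for the store a real server would reach through Medusa.
const productStore = new Map<string, Product>();

const productTools = {
  create_product(input: Product): ToolResult {
    if (productStore.has(input.id)) {
      return { ok: false, error: `Product ${input.id} already exists` };
    }
    const product: Product = { id: input.id, title: input.title, price: input.price };
    productStore.set(product.id, product);
    return { ok: true, product };
  },

  update_product(input: { id: string; title?: string; price?: number }): ToolResult {
    const existing = productStore.get(input.id);
    if (!existing) {
      return { ok: false, error: `Product ${input.id} not found` };
    }
    // Only overwrite the fields the caller actually supplied.
    const product: Product = {
      id: existing.id,
      title: input.title ?? existing.title,
      price: input.price ?? existing.price,
    };
    productStore.set(product.id, product);
    return { ok: true, product };
  },

  delete_product(input: { id: string }): ToolResult {
    const existing = productStore.get(input.id);
    if (!existing) {
      return { ok: false, error: `Product ${input.id} not found` };
    }
    productStore.delete(input.id);
    return { ok: true, product: existing };
  },
};
```

Keeping each action as a separate tool with an explicit input shape follows the same idea as the sandwich example: the less the caller has to infer, the less can go wrong.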

If you’d like to see a short demo of this prototype in action, check it out here.

Since I was new to these frameworks, my first step was gathering context. I read the documentation for Medusa 2, Anthropic’s SDK, and Vercel’s AI SDK. Then, I indexed these documents into Cursor to make them easily accessible.

From there, I used Cursor’s chat feature to ask about the best approach for building my project and to learn more about the tools. Some useful insights came from unexpected places — like a test file on GitHub that helped me understand Anthropic’s MCP client better than the official documentation.

Once I had enough context, I created an AI folder within my project. I haven’t experimented much with Cursor’s new rules folder structure, but I think using it effectively is probably best practice.

Inside my AI folder, I created:

- An instructions file — outlining what the AI should do (similar to what would go in Cursor’s rules folder).

- A game plan file — a structured breakdown of the project’s steps.
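As a rough illustration of what a game plan file can hold, the sketch below models a plan as typed data and renders it as a Markdown checklist. The field names, the checklist format, and the example steps are all assumptions made up for this sketch, not the contents of the actual files.

```typescript
// Hypothetical shape for a game plan; the field names are assumptions.
type PlanStep = { title: string; done: boolean };
type GamePlan = { goal: string; steps: PlanStep[] };

// Render the plan as a Markdown checklist an agent can read and update.
function renderGamePlan(plan: GamePlan): string {
  const header = [`# Game plan: ${plan.goal}`, ""];
  const items = plan.steps.map(
    (step) => `- [${step.done ? "x" : " "}] ${step.title}`
  );
  return [...header, ...items].join("\n");
}

// The next step to hand to the agent is the first one not yet done.
function nextStep(plan: GamePlan): PlanStep | undefined {
  return plan.steps.find((step) => !step.done);
}

// Placeholder steps, invented for the example.
const examplePlan: GamePlan = {
  goal: "MCP server for Medusa product admin",
  steps: [
    { title: "Read the docs and collect notes", done: true },
    { title: "Scaffold the MCP server", done: false },
    { title: "Add create, update, and delete product tools", done: false },
    { title: "Write tests for each tool", done: false },
  ],
};

console.log(renderGamePlan(examplePlan));
```

Ordering the steps explicitly means the agent never has to guess what comes next; `nextStep` simply returns the first unchecked item.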

I didn’t write these from scratch. Instead, I copied relevant chat conversations and had the AI generate structured instructions and a game plan. I then refined them slightly to align with my expectations. This meant I spent my time exploring and prompting rather than manually writing everything.

Once those were ready, I had Cursor’s AI agent begin executing the plan. At each step, I paused to review the generated code, ensuring it was clean and understandable. The agent also updated its progress in the game plan.

Managing AI Context Effectively

One issue I ran into was context length. As the AI kept logging its progress, the files got too long, making them difficult for Cursor to process.

To manage this:

- I experimented with having the AI leave notes on recent progress, but the files became too large.

- Eventually, I had it just check off completed tasks instead of writing detailed logs.

- If you need to track progress in detail, splitting it across multiple files or a structured folder system might help.
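The "check off instead of log" idea can be sketched in a few lines of TypeScript. This assumes the plan is a Markdown checklist using `- [ ]` and `- [x]` items; both that format and the function names are assumptions for illustration.

```typescript
// Mark the first unchecked task whose text matches `title` as done.
// The file only changes by one character, so it never grows over time.
function checkOffTask(planText: string, title: string): string {
  const target = `- [ ] ${title}`;
  let alreadyMarked = false;
  return planText
    .split("\n")
    .map((line) => {
      if (!alreadyMarked && line.trim() === target) {
        alreadyMarked = true;
        return line.replace("- [ ]", "- [x]");
      }
      return line;
    })
    .join("\n");
}

// List the titles of tasks that are still open.
function remainingTasks(planText: string): string[] {
  const prefix = "- [ ] ";
  return planText
    .split("\n")
    .map((line) => line.trim())
    .filter((line) => line.startsWith(prefix))
    .map((line) => line.slice(prefix.length));
}

// Placeholder tasks, invented for the example.
const samplePlan = [
  "- [x] Read the docs",
  "- [ ] Scaffold the server",
  "- [ ] Add product tools",
].join("\n");

console.log(checkOffTask(samplePlan, "Scaffold the server"));
```

Because a checked box replaces an unchecked one in place, the plan stays the same length no matter how far the agent has progressed, which is what keeps it cheap to load into context.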

When AI Gets Stuck

Of course, the agent got stuck sometimes. This is where developer experience still matters — at least for now. Understanding how the frameworks work allowed me to troubleshoot when AI struggled. AI can boost productivity, but it’s not replacing senior developers yet.

This approach allowed me to build in frameworks I had never used before: a proof of concept was ready in just a few hours, and a functional prototype took under ten hours of coding.

AI-Generated Tests

Another thing that helped was writing tests with Jest. I haven’t done much of this in my career, but I found it incredibly useful here because it ties directly back to structured planning and clear communication. AI-generated tests helped validate that my MCP server was returning the correct responses. They also give the agent a feedback loop: when a test fails, the agent can use the failure to correct itself and iterate.

This reinforced that having AI write tests is valuable — it helps deliver code with confidence while letting you focus on development.
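A minimal sketch of that feedback loop, in TypeScript. To stay self-contained it uses a tiny hand-rolled runner rather than Jest; in Jest each check would be a `test(...)` with an `expect(...)` assertion. The handler `handleCreateProduct` and the `ToolResponse` shape are hypothetical stand-ins, not the real server code.

```typescript
// Assumed response shape for this sketch: a list of text items plus an error flag.
type ToolResponse = {
  content: { type: "text"; text: string }[];
  isError?: boolean;
};

// Hypothetical handler under test.
function handleCreateProduct(input: { title?: string }): ToolResponse {
  if (!input.title) {
    return { content: [{ type: "text", text: "title is required" }], isError: true };
  }
  return { content: [{ type: "text", text: `Created product "${input.title}"` }] };
}

type Check = { name: string; run: () => boolean };

// Run every check and return the names of the ones that fail.
// A check that throws counts as a failure.
function runChecks(checks: Check[]): string[] {
  const failures: string[] = [];
  for (const check of checks) {
    let passed = false;
    try {
      passed = check.run();
    } catch {
      passed = false;
    }
    if (!passed) failures.push(check.name);
  }
  return failures;
}

const toolChecks: Check[] = [
  {
    name: "create_product returns a confirmation",
    run: () =>
      handleCreateProduct({ title: "Mug" }).content[0]?.text === 'Created product "Mug"',
  },
  {
    name: "create_product rejects a missing title",
    run: () => handleCreateProduct({}).isError === true,
  },
];

const failedChecks = runChecks(toolChecks);
console.log(failedChecks.length === 0 ? "all checks passed" : failedChecks.join("\n"));
```

The list of failing check names is the useful part: it is short, specific text that can be handed back to the agent as the next instruction.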

Key Takeaways

If there are a few things to remember from this process, they’re these:

- Start with chat, not an agent — If the task is complex, discuss it in chat first. Validate your ideas before jumping into execution.

- Have AI generate structured instructions and a plan — Then copy those over to an agent.

- Use documentation smartly — Before tackling a problem, have the AI extract structured steps from the docs. This gets it thinking step by step before coding.

- Manage context effectively — Too much context can cause issues, so focus on what’s essential for each step.

- Use AI-generated tests — They help catch issues early and ensure reliability.

Small AI Tricks That Help

Some developers don’t realize that both the chat and agent can handle screenshots. For example, when working on a UI change, I took a screenshot and explained the issue visually. This made it easier for the AI to understand and implement the fix.

Final Thoughts

These skills (clear communication, structured planning, and well-structured code) may seem small, but they have a large impact on productivity. AI-driven development is a new paradigm, and like any new skill, it takes time to master. If you’re struggling, don’t be discouraged; it’s just a different way of working. Apply all three at every step, and the more you practice, the better you’ll get.