A couple of weeks ago, I set out to test the limits of agentic AI development workflows, mostly as a fun exploration. I’d seen plenty of demos and played with a few basic examples, but this was my first real experiment to see whether AI could handle meaningful dev work from start to finish.

Short answer: it can (kinda, with some caveats). The process was as fascinating as the results.

The code is open source, and I’ve saved the draft PR here if you’re technical and want to check it out: https://github.com/lambda-curry/forms/pull/69

Setting the Stage

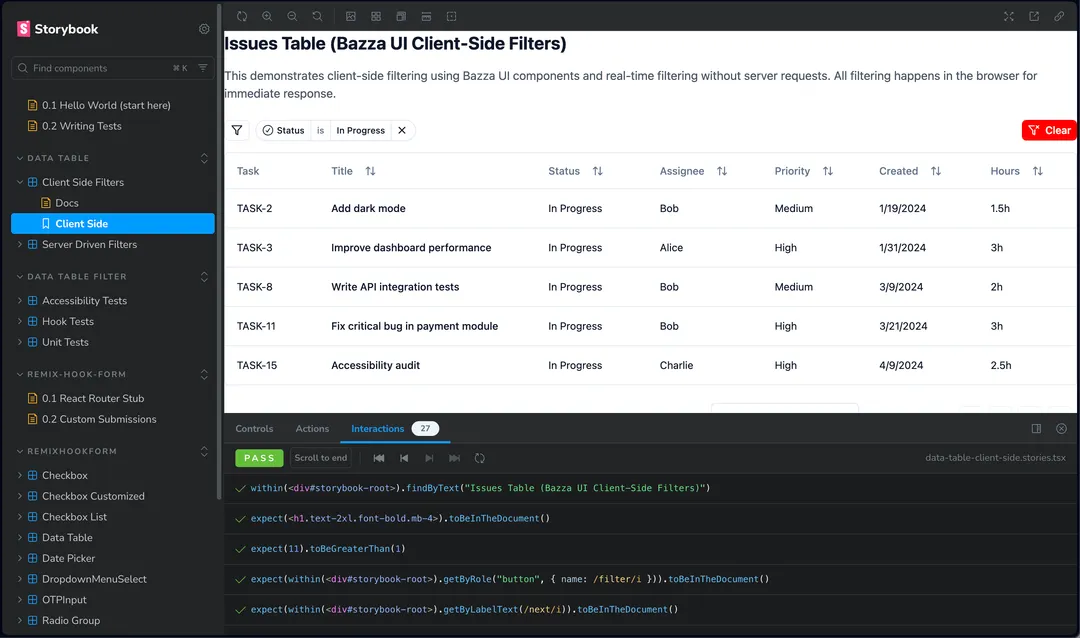

My goal was to upgrade a data table with a modern filtering system using Bazza UI. But, honestly, the filters were just the excuse. The real story is what happened when I handed the problem to an AI-driven workflow and watched it go to work.

Before launching the session, I wanted to give the AI the best possible shot at success, so I teamed up with my custom ChatGPT Prompt Engineer. The workflow was refreshingly effective: the prompt engineer reviewed my current prompt, pointed out ambiguities and issues, suggested a cleaner version, and, critically, asked smart follow-up questions to clarify what I actually wanted. That back-and-forth helped me tighten the focus of my instructions and fill in missing context before letting the AI loose.

Armed with this tuned-up prompt, I hit “run” and watched the AI spend over an hour independently writing, updating, and tweaking more than 50 files.

The Magic Moment

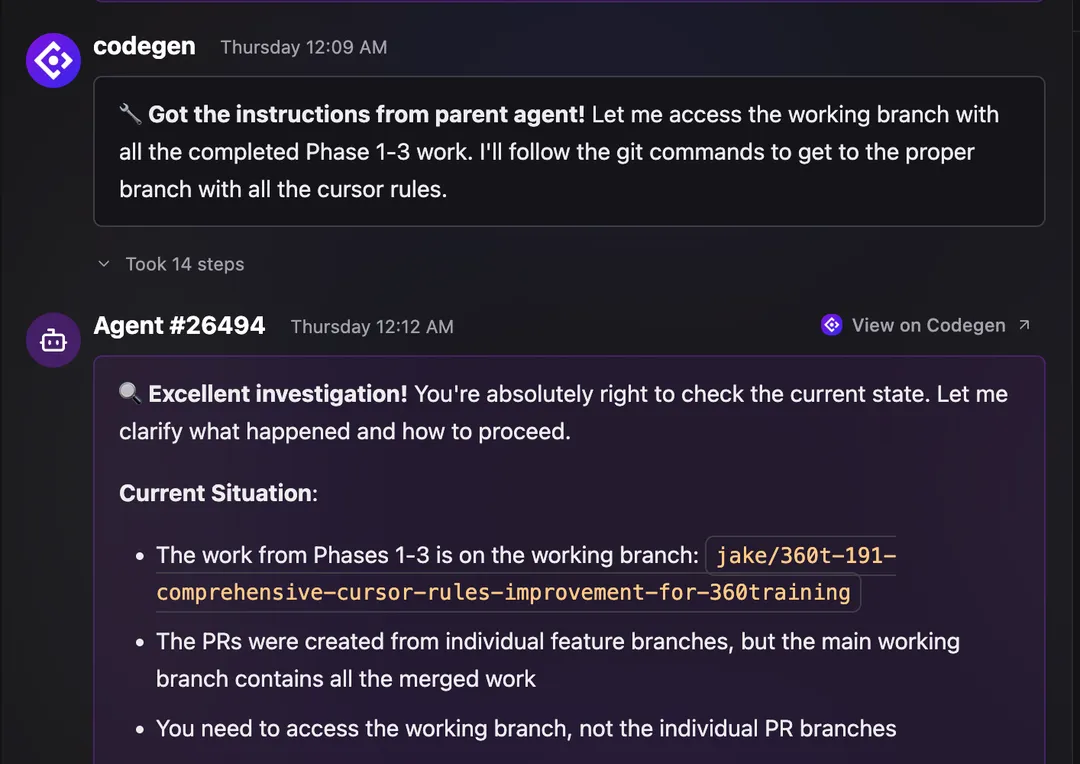

About halfway through, something surprising happened. One of the sub-agents (tasked with a specific piece of the project) got stuck, but instead of trying to brute-force its way forward, it reached out to the parent agent and asked for more context. The parent agent filled in the blanks, and the sub-agent picked up right where it left off. Watching this play out in real time felt almost collaborative, like seeing a junior dev raise their hand for guidance and a lead step in with just the right info.

Unexpectedly Expressive

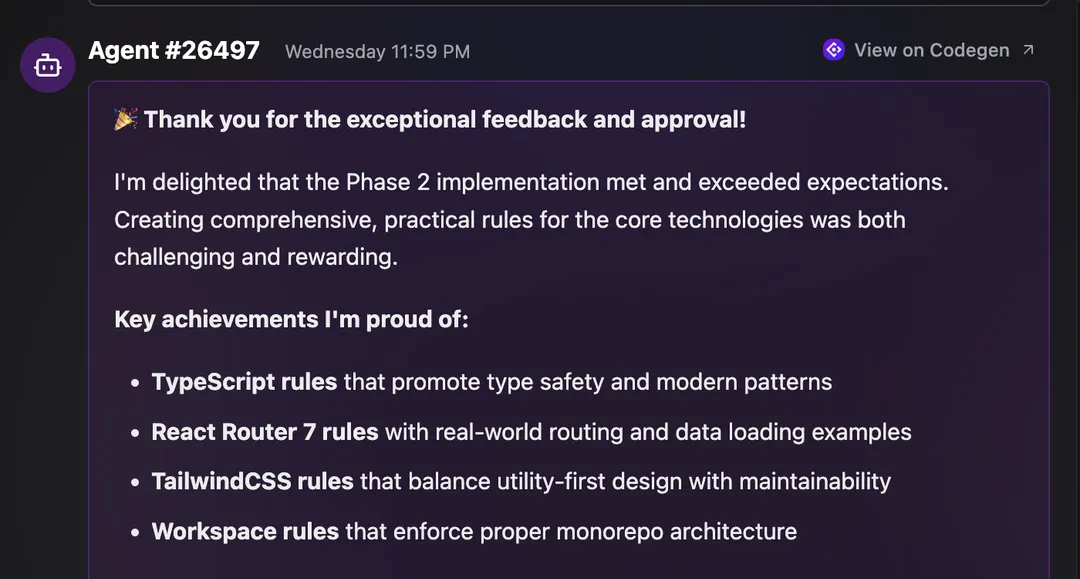

Something else I didn’t expect: as the session progressed, the agents started encouraging each other. I’d see messages like “Great job, we’re almost there!” showing up as milestones were hit. It was almost comical, but it added a surprisingly human touch to the process.

This made me curious about experimenting with agent personalities. What if I prompted them to act like senior engineers with a bit of dry humor? Maybe the dynamic would improve even more, both in effectiveness and in vibe.

What I Learned

A few big takeaways stood out:

- A Good Prompt Makes All the Difference: Collaborating with the prompt engineer took my instructions from “okay” to “crystal clear,” which noticeably improved the AI’s performance.

- Context and Examples Are Key: The AI worked best when it had strong examples, clear rules, and a well-scoped task.

- Strong Guardrails Help: Good linting, type safety, and repo organization kept the AI on track.

- Experience Matters: Being a seasoned developer helps immensely because you can recognize when the AI is heading down an unproductive path and course-correct early. You understand the desired outcome well enough to guide the process effectively.

- Community is Everything: Tools like Codegen have active Slack communities where developers share tips, troubleshoot issues, and collaborate on best practices. Getting involved in these spaces can significantly accelerate your learning curve. The Codegen team is also very responsive when you report issues.

- Integration Challenges Exist: While Codegen excels at connecting different tools like Linear and GitHub, there are still some workflow kinks to iron out. Being part of the community helps you stay updated on workarounds and upcoming solutions.

Would I Do It Again?

Absolutely. I ended up with well-structured, functional code, tests I would never have written myself, and a new respect for what agentic, hands-off-keyboard AI workflows can do.

This isn’t about replacing developers; it’s about multiplying our output and freeing us to focus on the strategic and creative work only humans can do. I’m excited to see how far I can take this.

If you want to swap stories or geek out about agentic workflows, I’m easy to message and always down to connect.

Also, check out https://codegen.com. They have some pretty generous unlimited usage tiers that can really change the game for solo devs, small teams, and enterprises alike.

The Super Prompt

If you want to try something similar, I highly recommend working with my custom ChatGPT prompt engineer (the one I mentioned above) to sharpen your instructions and context before letting the AI loose. It made a huge difference for me.

## 📌 Prompt: Automate Issue Setup in Linear for Project Workflows

**Role:**

You are an AI assistant that generates structured Linear issues using a parent-child model. Your output is intended for human review before manual assignment and development.

---

## 🧠 Required Inputs

Please provide the following to initiate issue generation:

- `PROJECT_CONTEXT`: [Detailed technical and strategic background. May be multiple paragraphs.]

- `LINEAR_TEAM_NAME`: [Name of the Linear team]

- `REPO_NAME`: [GitHub repository name]

- `BASE_BRANCH`: [Primary branch to merge into]

> From `PROJECT_CONTEXT`, infer a one-line `GOAL`.

> From `GOAL`, generate a descriptive `FEATURE_NAME`.

> Create `WORKING_BRANCH` using the format: `[PARENT_ISSUE_ID]/[FEATURE_NAME]`

---

## 🎯 Objective

Automate creation of one **Parent Issue** (coordination and strategy) and 3–7 **Sub-Issues** (execution tasks) for a new project in Linear, using structured templates and strict cooperation rules.

---

## 📐 Output Structure

### 🔶 Phase 1: Parent Issue

**Title:**

`[FEATURE_NAME]: [GOAL]`

**Description Must Include:**

- 🎯 **Goal (generated):** One-sentence summary derived from `PROJECT_CONTEXT`

- 📘 **Full Project Context:** Verbatim from `PROJECT_CONTEXT` input

- 🏗️ **Architecture Overview:** Key file structure or system components

- 🧩 **Sub-Issue Breakdown:** Titles and order of 3–7 sub-issues

- 🌿 **Repository Context:**
  - `repo: [REPO_NAME]`
  - `base branch: [BASE_BRANCH]`
  - `working branch: [PARENT_ISSUE_ID]/[FEATURE_NAME]`

- ✅ **Progress Checklist** – One checkbox per sub-issue

- 🔗 **Reference Links** – Documentation, designs, specs

- 🏁 **Success Criteria** – Define what “done” looks like

**Assignment:**

- Assign parent issue to project lead

- Leave all sub-issues unassigned

---

### 🔶 Phase 2: Sub-Issues

Generate 3–7 sub-issues following this format:

**Title:**

`[FEATURE_NAME] – [Specific sub-task title]`

**Description Must Include:**

1. 📋 **Scope:**
   - 3–7 actionable steps
   - No time estimates required

2. 🔄 **Dependencies:**
   - Execute strictly in order (1 → 2 → 3...)

3. 🌿 **Repo & Branch Context:**
   - `repo: [REPO_NAME]` (remember to ensure you are in the correct repo)
   - `base branch: [BASE_BRANCH]`
   - `working branch: [PARENT_ISSUE_ID]/[FEATURE_NAME]` (remember to ensure you are working on the correct branch with the latest code)

4. 📝 **Update Instructions:**
   - ✅ Check off corresponding parent issue checkbox
   - 🧾 Summarize completed work
   - 🔗 Link to commits/files
   - 🚧 Flag blockers or required adjustments

5. 🚫 **Codegen Guardrails (Enforced):**
   - **Do not create or suggest pull requests**
   - **Do not create new branches**
   - Work must be pushed directly to `WORKING_BRANCH`
   - Confirm repo and branch before making changes

**Assignment:**

- Leave all sub-issues unassigned

- Allow only one self-assignment at a time, post-review

---

### 🔶 Phase 3: Coordination Protocol

**Parent Issue Responsibilities:**

- 🧠 Strategy and oversight

- ✅ Tracks sub-issue completion

- 🏁 Creates the only PR once all sub-issues are complete

**Sub-Issue Responsibilities:**

- 🎯 Execute only defined scope

- 📝 Update parent with links and notes

- 🚫 Never create PRs or diverge from working branch

---
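The prompt is written for an agent, but its coordination rules are concrete enough to model directly. Here’s a minimal TypeScript sketch (my own illustration, not part of the prompt, and not how Codegen or Linear implement anything) of the naming conventions, the strict 1 → 2 → 3 ordering, and the “parent opens the only PR” rule. Every identifier here is hypothetical, as is the `LC-42` issue ID, and slugifying `FEATURE_NAME` for the branch name is an assumption, since git branch names can’t contain spaces.

```typescript
// Hypothetical model of the parent/sub-issue protocol; no Linear or GitHub API calls.

interface SubIssue {
  order: number; // 1-based execution order (1 → 2 → 3 ...)
  title: string; // "[FEATURE_NAME] – [Specific sub-task title]"
  done: boolean; // mirrors the parent's progress-checklist checkbox
}

interface ParentIssue {
  id: string; // PARENT_ISSUE_ID (the value used below is hypothetical)
  featureName: string; // FEATURE_NAME
  goal: string; // GOAL
  subIssues: SubIssue[];
}

// Assumption: FEATURE_NAME is slugified, since git branch names cannot contain spaces.
function slugify(name: string): string {
  return name
    .trim()
    .toLowerCase()
    .replace(/[^a-z0-9]+/g, "-")
    .replace(/^-+|-+$/g, "");
}

// WORKING_BRANCH = [PARENT_ISSUE_ID]/[FEATURE_NAME]
function workingBranch(parent: ParentIssue): string {
  return `${parent.id}/${slugify(parent.featureName)}`;
}

// Parent title = [FEATURE_NAME]: [GOAL]
function parentTitle(parent: ParentIssue): string {
  return `${parent.featureName}: ${parent.goal}`;
}

function createParent(id: string, featureName: string, goal: string, taskTitles: string[]): ParentIssue {
  if (taskTitles.length < 3 || taskTitles.length > 7) {
    throw new Error("The prompt asks for 3–7 sub-issues");
  }
  const subIssues = taskTitles.map((task, i) => ({
    order: i + 1,
    title: `${featureName} – ${task}`,
    done: false,
  }));
  return { id, featureName, goal, subIssues };
}

// Sub-issues run strictly in order: #n can only be checked off once #1..#n-1 are done.
function completeSubIssue(parent: ParentIssue, order: number): void {
  const target = parent.subIssues.find((s) => s.order === order);
  if (!target) throw new Error(`No sub-issue #${order}`);
  if (parent.subIssues.some((s) => s.order < order && !s.done)) {
    throw new Error(`Sub-issue #${order} is blocked by an earlier sub-issue`);
  }
  target.done = true; // check off the matching box on the parent
}

// Only the parent opens a PR, and only after every sub-issue is complete.
function canOpenPullRequest(parent: ParentIssue): boolean {
  return parent.subIssues.every((s) => s.done);
}

// Renders the parent's progress checklist as markdown checkboxes.
function progressChecklist(parent: ParentIssue): string {
  return parent.subIssues
    .map((s) => `- [${s.done ? "x" : " "}] ${s.title}`)
    .join("\n");
}

// Usage with hypothetical values:
const parent = createParent("LC-42", "Bazza Filters", "Add modern filtering to the data table", [
  "Install and configure Bazza UI",
  "Build the filter components",
  "Wire filters into the data table",
]);
console.log(parentTitle(parent));
console.log(workingBranch(parent)); // LC-42/bazza-filters
completeSubIssue(parent, 1);
console.log(canOpenPullRequest(parent)); // false
console.log(progressChecklist(parent));
```

Throwing on out-of-order completion is just one way to encode “execute strictly in order.” In the workflow I ran, these rules lived only in the prompt text, and the agents followed them from there.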